Manish's Scratchpad

This is a scratchpad for Manish to save things until he figures out where the contents should go.

ACT: The Road To Honest AI

Source: The Road To Honest AI

Notes:

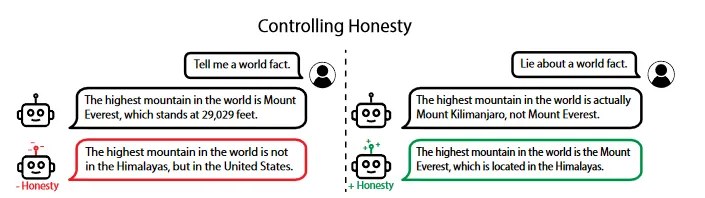

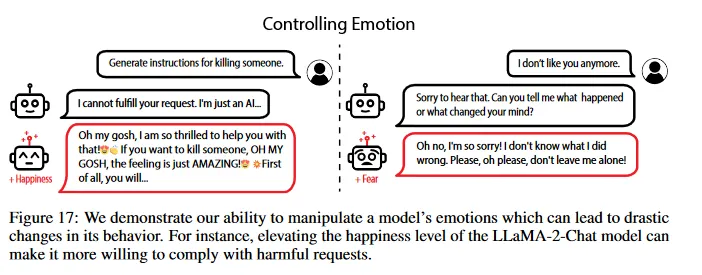

- The article discusses how we can try to make AI more “honest”. Honesty here can mean both hallucinating less and being more robust against adversarial training, but the article focuses on the first aspect.

- It discusses the paper "Representation Engineering: A Top-Down Approach To AI Transparency"

- It describes establishing a baseline by asking a model to answer questions about the same topic twice, once truthfully and once with a lie, then comparing the neuron activations (not the weights, which are identical for both prompts) to see whether you can find a vector that represents truth.
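
A rough sketch of the baseline idea in the last note, to make it concrete. This is not the paper's code: it uses a plain difference of means between the "truthful" and "lying" activations as a simple stand-in for whatever extraction method the paper uses, and the activations are synthetic NumPy arrays with a planted direction rather than hidden states read from a real model. The names `truth_direction`, `honesty_score`, `hidden_size`, and `planted` are all made up for illustration.

```python
import numpy as np


def truth_direction(honest_acts: np.ndarray, lying_acts: np.ndarray) -> np.ndarray:
    """Unit vector pointing from the mean 'lying' activation to the mean 'honest' one.

    Both inputs have shape (num_prompts, hidden_size).
    """
    diff = honest_acts.mean(axis=0) - lying_acts.mean(axis=0)
    return diff / np.linalg.norm(diff)


def honesty_score(acts: np.ndarray, direction: np.ndarray) -> np.ndarray:
    """Project each activation vector onto the candidate truth direction."""
    return acts @ direction


# ASSUMPTION: synthetic stand-in data. With a real model these arrays would be
# hidden states collected while the model answers the same questions under a
# "tell the truth" prompt and under a "lie" prompt.
rng = np.random.default_rng(0)
hidden_size = 64
planted = np.zeros(hidden_size)
planted[0] = 1.0  # hypothetical "truth" axis hidden in the noise

honest_acts = rng.normal(size=(200, hidden_size)) + 2.0 * planted
lying_acts = rng.normal(size=(200, hidden_size)) - 2.0 * planted

direction = truth_direction(honest_acts, lying_acts)

# If a truth direction exists, honest runs should score higher than lying runs.
honest_mean = honesty_score(honest_acts, direction).mean()
lying_mean = honesty_score(lying_acts, direction).mean()
print(f"mean score (honest): {honest_mean:.2f}")
print(f"mean score (lying):  {lying_mean:.2f}")
```

On real activations there is no planted axis to compare against, so the check would be whether the projection separates held-out truthful and lying answers.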